High-energy physics workloads can benefit from massive parallelism, and the field is increasingly adopting deep learning solutions. Take, for example, the newly announced TrackML challenge [7], already running on Kaggle! This motivates CERN to consider provisioning GPUs as computation accelerators in our OpenStack cloud, giving both developers and batch processing access to powerful GPU computing resources.

What are the options?

Given the nature of our workloads, our focus is on discrete PCI-E Nvidia cards, like the GTX 1080Ti and the Tesla P100. There are two ways to provision these GPUs to VMs: PCI passthrough and virtualized GPU. The first method is not specific to GPUs; it applies to any PCI device. The device is claimed by a generic driver, VFIO, on the hypervisor (which can no longer use it), and exclusive access to it is given to a single VM [1]. Essentially, from the host’s perspective the VM becomes a userspace driver [2], while the VM sees the physical PCI device and can use the normal drivers, with no functional limitations and no performance overhead expected.

Figure: Visualizing passthrough vs. mdev vGPU [9]

Perhaps some “limitation in functionality” is in fact warranted, so that an untrusted VM can’t make low-level hardware configuration changes on the passed-through device, like changing its power settings or even its firmware! Security-wise, PCI passthrough leaves a lot to be desired. Apart from allowing the VM to change the device’s configuration, it might leave room for side-channel attacks on the hypervisor (although we have not observed this, and a hardware “IOMMU” protects against DMA attacks from the passed-through device). Perhaps more importantly, the device’s state won’t be automatically reset after it is deallocated from a VM. In the case of a GPU, data from a previous use may persist in the device’s global memory when it is allocated to a new VM. The first set of concerns may be mitigated by improving VFIO, while the issue of device reset or “cleanup” provides a use case for a more general accelerator management framework in OpenStack -- the nascent Cyborg project may fit the bill.

Virtualized GPUs are a vendor-specific option, promising better manageability and alleviating the previous issues. Instead of having to pass through entire physical devices, we can split physical devices into virtual pieces on demand (well, almost on demand; there needs to be no vGPU allocated in order to change the split) and hand out a piece of GPU to any VM. This solution is indeed more elegant. In Intel and Nvidia’s case, virtualization is implemented as a software layer in the hypervisor, which provides “mediated devices” (mdev [3]), virtual slices of GPU that appear like virtual PCI devices to the host and can be given to the VMs individually. This requires a special vendor-specific driver on the hypervisor (Nvidia GRID, Intel GVT-g), unfortunately not yet supporting KVM. AMD is following a different path, implementing SR-IOV at a hardware level.

CERN’s implementation

PCI passthrough has been supported in Nova for several releases, so it was the first solution we tried. There is a guide in the OpenStack docs [4], as well as previous summit talks on the subject [1]. Once everything is configured, users see special VM flavors (“g1.large”), whose extra_specs field requests passthrough of a particular kind of GPU. For example, deploying a GTX 1080Ti means whitelisting the card on the hypervisor, defining a PCI alias for it, and referencing that alias from the flavor.

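As a rough illustration of what such a configuration involves, the sketch below generates the three pieces described in the Nova guide [4]: whitelist entries for the compute node, alias entries, and the flavor extra spec. It is not our exact configuration. The PCI IDs are assumptions to be verified with `lspci -nn`, the alias names are hypothetical, and the option names (`passthrough_whitelist` and `alias` in the `[pci]` section) follow the guide for releases of that era, so check the guide for the release you run.

```python
import json

# Assumed PCI IDs for a GTX 1080Ti (VGA function) and its HDMI audio function.
# Verify them on the hypervisor with `lspci -nn` before relying on them.
NVIDIA_VENDOR_ID = "10de"
GTX1080TI_VGA_ID = "1b06"
GTX1080TI_AUDIO_ID = "10ef"


def whitelist_entry(vendor_id, product_id):
    """Value for one whitelist line on the compute node."""
    return json.dumps({"vendor_id": vendor_id, "product_id": product_id})


def alias_entry(name, vendor_id, product_id, device_type="type-PCI"):
    """Value for one alias line; `name` is what flavors refer to."""
    return json.dumps({
        "vendor_id": vendor_id,
        "product_id": product_id,
        "device_type": device_type,
        "name": name,
    })


def flavor_property(requests):
    """Build the `pci_passthrough:alias` extra spec from {alias_name: count}."""
    value = ",".join(f"{name}:{count}" for name, count in requests.items())
    return {"pci_passthrough:alias": value}


# Hypothetical alias names, one per PCI function of the card.
print("[pci]")
for product_id in (GTX1080TI_VGA_ID, GTX1080TI_AUDIO_ID):
    print("passthrough_whitelist =", whitelist_entry(NVIDIA_VENDOR_ID, product_id))
print("alias =", alias_entry("gtx1080ti-vga", NVIDIA_VENDOR_ID, GTX1080TI_VGA_ID))
print("alias =", alias_entry("gtx1080ti-audio", NVIDIA_VENDOR_ID, GTX1080TI_AUDIO_ID))
print(flavor_property({"gtx1080ti-vga": 1, "gtx1080ti-audio": 1}))
```

Generating the values with `json.dumps` avoids quoting mistakes in the JSON-style option values, which is the kind of detail that tends to go wrong when these lines are templated by hand.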

A detail here is that most GPUs appear as two PCI devices, the VGA device and the sound device, both of which must be passed through at the same time (they are in the same IOMMU group; basically, an IOMMU group [6] is the smallest unit that can be passed through).
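To see which devices share a group on a given hypervisor, one can walk the kernel's sysfs tree. The following is a minimal sketch, assuming the standard Linux layout `/sys/kernel/iommu_groups/<group>/devices/<pci-address>`; the function names are our own, and the `root` parameter exists only so the code can be pointed at a different directory.

```python
import os


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the sorted PCI addresses it contains."""
    groups = {}
    if not os.path.isdir(root):
        # IOMMU disabled or not a Linux host: nothing to report.
        return groups
    for group in os.listdir(root):
        devices_dir = os.path.join(root, group, "devices")
        if os.path.isdir(devices_dir):
            groups[group] = sorted(os.listdir(devices_dir))
    return groups


def group_of(address, groups):
    """Return (group, members) for the group containing `address`, or None."""
    for group, members in groups.items():
        if address in members:
            return group, members
    return None


# Print every group; a GPU's VGA and audio functions should share one entry.
for group, members in sorted(iommu_groups().items(), key=lambda item: item[0]):
    print(f"group {group}: {', '.join(members)}")
```

If `group_of` returns more members than the two GPU functions, those extra devices would have to be passed through (or left unused) together with the GPU.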

Our cloud was running Ocata at the time, using CellsV1, and there were a few hiccups, such as the Puppet modules not parsing an option syntax correctly (MultiStrOpt) and CellsV1 dropping the PCI requests. For Puppet, we were simply missing some upstream commits [15]. From Pike on and in CellsV2, these issues shouldn’t exist. As soon as we had worked around them and puppetized our hypervisor configuration, we started offering cloud GPUs with PCI passthrough and evaluating the solution. We created a few GPU flavors, following the AWS example of keeping the number of vCPUs the same as in the corresponding normal flavors.

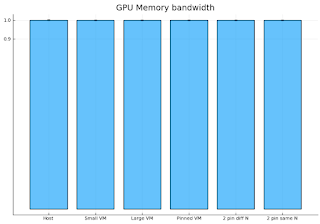

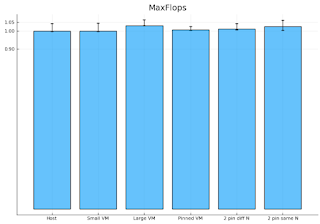

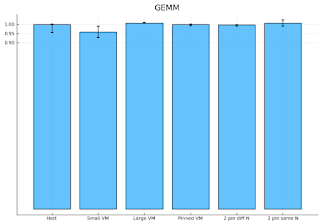

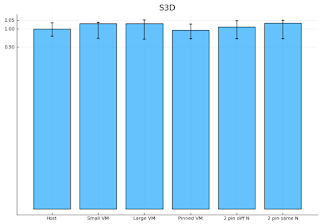

From the user’s perspective, there proved to be no functionality issues. CUDA applications, like TensorFlow, run normally; the users are very happy that they finally have exclusive access to their GPUs (there is good tenant isolation). And there is no performance penalty in the VM, as measured by the SHOC benchmark [5]. Although SHOC is admittedly quite old, we preferred it because it also measures low-level details, not just application performance.

From the cloud provider’s perspective, there are a few issues. Apart from the potential security problems identified before, since the hypervisor has no control over the passed-through device, we can’t monitor the GPU. We can’t measure its actual utilization, or get warnings in case of critical events, like overheating.

Figure: Normalized performance of VMs vs. hypervisor on some SHOC benchmarks. First 2: low-level GPU features. Last 2: GPU algorithms [8]. There are different test cases of VMs, to check if other parameters play a role. The “Small VM” has 2 vCPUs, “Large VM” has 4, “Pinned VM” has 2 pinned vCPUs (thread siblings), “2 pin diff N” and “2 pin same N” measure performance in 2 pinned VMs running simultaneously, in different vs. the same NUMA nodes.
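For readers who want to reproduce this kind of comparison, the normalization can be sketched as below. This is an illustration under assumptions: it treats every metric as higher-is-better (bandwidth or FLOPS style), and the benchmark names and numbers in the usage lines are placeholders, not our measurements.

```python
def normalize(vm_results, hypervisor_results):
    """Divide each VM score by the bare-metal score for the same benchmark.

    Assumes higher-is-better metrics, so 1.0 means parity with the
    hypervisor and values below 1.0 mean the VM is slower.
    Benchmarks missing from the baseline (or with a zero baseline) are skipped.
    """
    return {
        name: score / hypervisor_results[name]
        for name, score in vm_results.items()
        if hypervisor_results.get(name)
    }


# Placeholder numbers purely for illustration, not measured values.
hypervisor = {"bench_a": 200.0, "bench_b": 50.0}
small_vm = {"bench_a": 198.0, "bench_b": 50.0}

for name, ratio in normalize(small_vm, hypervisor).items():
    print(f"{name}: {ratio:.2f}x of hypervisor performance")
```

For lower-is-better metrics such as latency, the ratio would need to be inverted before plotting the two kinds of results on the same axis.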

Virtualized GPU experiments

The allure of vGPUs amounts largely to finer-grained distribution of resources, fewer security concerns (debatable) and monitoring. Nova support for provisioning vGPUs is offered in Queens as an experimental feature. However, our cloud is running on KVM hypervisors (on CERN CentOS 7.4 [14]), which Nvidia does not support as of May 2018 (Nvidia GRID v6.0). When it does, the hypervisor will be able to split the GPU into vGPUs according to one of many possible profiles, such as into 4 or 16 pieces. Libvirt then assigns these mdevs to VMs in a similar way to hostdevs (passthrough). Details are in the OpenStack docs [16].
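Once a vendor driver registers mediated devices, the available profiles are exposed through sysfs, as described in the kernel mdev documentation [3]. The sketch below lists them. It assumes the `/sys/class/mdev_bus/<parent>/mdev_supported_types/<type>/` layout with its `name` and `available_instances` attributes; we could not run it against Nvidia hardware on KVM, and the function names are our own.

```python
import os


def _read(path):
    """Return the stripped contents of a sysfs attribute, or None if absent."""
    try:
        with open(path) as handle:
            return handle.read().strip()
    except OSError:
        return None


def mdev_types(root="/sys/class/mdev_bus"):
    """List the mediated device types offered by each parent device."""
    found = []
    if not os.path.isdir(root):
        # No mdev-capable driver loaded on this host.
        return found
    for parent in sorted(os.listdir(root)):
        types_dir = os.path.join(root, parent, "mdev_supported_types")
        if not os.path.isdir(types_dir):
            continue
        for type_id in sorted(os.listdir(types_dir)):
            type_dir = os.path.join(types_dir, type_id)
            available = _read(os.path.join(type_dir, "available_instances"))
            found.append({
                "parent": parent,
                "type": type_id,
                "name": _read(os.path.join(type_dir, "name")),  # optional attribute
                "available_instances": int(available)
                if available is not None and available.isdigit() else None,
            })
    return found


for entry in mdev_types():
    print(entry["parent"], entry["type"], entry["name"], entry["available_instances"])
```

The `available_instances` value is what reflects the split: a profile that divides the card into 4 pieces would report up to 4 instances while none are allocated.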

Despite this promise, it remains to be seen if virtual GPUs will turn out to be an attractive offering for us. This depends on vendors’ licensing costs (such as per-VM pricing), which, for the compute-compatible offering, can be significant. Added to that is the fact that only a subset of standard CUDA is supported (unified memory and “CUDA tools” are not supported [11]; the latter probably refers to tools like the Nvidia profiler). vGPUs also oversubscribe the GPU’s compute resources, which can be seen in either a positive or a negative light. On the one hand, this yields higher resource utilization, especially for bursty workloads, such as those of developers. On the other hand, we may expect a lower quality of service [12].

And the road goes on...

Our initial cloud GPU offering is very limited, and we intend to gradually increase it. Before that, it will be important to address (or at least be aware of) the security repercussions of PCI passthrough. Even more important is to handle GPU accounting in a straightforward manner, by enforcing quotas on GPU resources. So far we haven’t tested GPU P2P with multi-GPU VMs, which is reported to be problematic [13].

Another direction we’ll be researching is offering GPU-enabled container clusters, backed by PCI passthrough VMs. It may be that, with this approach, we can emulate a behavior similar to vGPUs and circumvent some of the bigger problems with PCI passthrough.

References

[1]: OVH presentation: https://www.youtube.com/watch?v=1tdvz3ejokM

[2]: VFIO description: https://www.kernel.org/doc/Documentation/vfio.txt

[3]: VFIO mediated devices (mdev): https://www.kernel.org/doc/Documentation/vfio-mediated-device.txt

[4]: Nova docs on PCI passthrough: https://docs.openstack.org/nova/latest/admin/pci-passthrough.html

[5]: SHOC benchmark suite: https://github.com/vetter/shoc

[6]: IOMMU groups: https://vfio.blogspot.ch/2014/08/iommu-groups-inside-and-out.html

[7]: TrackML challenge announcement: https://home.cern/about/updates/2018/05/are-you-trackml-challenge

[8]: S3D: https://github.com/vetter/shoc/wiki/S3d, https://www.olcf.ornl.gov/wp-content/themes/olcf/titan/Titan_BuiltForScience.pdf

[9]: Images taken from: http://www.linux-kvm.org/images/5/59/02x03-Neo_Jia_and_Kirti_Wankhede-vGPU_on_KVM-A_VFIO_based_Framework.pdf

[10]: Nvidia vGPU architecture: https://docs.nvidia.com/grid/latest/grid-vgpu-user-guide/index.html#architecture-grid-vgpu

[11]: CUDA Unified memory and tooling not supported on Nvidia vGPU: https://docs.nvidia.com/grid/latest/grid-vgpu-user-guide/index.html#features-grid-vgpu

[12]: AMD on vGPU QoS: https://pro.radeon.com/en/quality-of-service-amd-mxgpu/

[13]: GPU P2P doesn’t work: http://lists.openstack.org/pipermail/openstack-operators/2018-March/014988.html

[14]: CERN CentOS 7: https://linux.web.cern.ch/linux/centos7/

[15]: Missing Puppet commits: https://github.com/openstack/puppet-nova/commit/e7fe8c16ae873834ccf145b2bcbc62081a957241, https://github.com/openstack/puppet-nova/commit/c1a4ab211dd2322572349719379cd13c6f2abb9a

[16]: Adding vGPUs to guests: https://docs.openstack.org/nova/latest/admin/virtual-gpu.html