With the accelerator stopped over the CERN annual closure until mid-March, this is a good period to plan reconfigurations of compute resources, such as migrating our central batch system, which schedules jobs across the central compute resources, to a new system based on HTCondor. The compute resources are heavily used, but there is more flexibility to drain some parts in the quieter periods of the year when there is not 10PB/month coming from the detectors. This year, however, we had an unexpected additional task: deploying the fixes for the Meltdown and Spectre exploits across the centre.

The CERN environment is based on Scientific Linux CERN 6 and CentOS 7. The hypervisors are now entirely CentOS 7 based, with guests running a variety of operating systems including Windows flavors and CERNVM. The upgrade campaign involved a number of steps:

- Assess the security risk

- Evaluate the performance impact

- Test the upgrade procedure and stability

- Plan the upgrade campaign

- Communicate with the users

- Execute the campaign

Security Risk

The CERN environment consists of a mixture of different services, with thousands of projects on the cloud, distributed across two data centres in Geneva and Budapest.

Two major risks were identified:

- Services which give end users the ability to run their own programs alongside others sharing the same kernel. Examples of this are the public login services and batch farms. Public login services provide an interactive Linux environment for physicists to log into from around the world, prepare papers, develop and debug applications and submit jobs to the central batch farms. The batch farms themselves provide thousands of worker nodes processing the data from CERN experiments by farming event after event to free compute resources. Both of these environments are multi-user and allow end users to compile their own programs, and thus were rated as high risk for the Meltdown exploit.

- The hypervisors provide support for a variety of different types of virtual machines. Different areas of the cloud provide access to different network domains or to compute optimised configurations. Many of these hypervisors will have VMs owned by different end users and therefore can be exposed to the Spectre exploits, even if the performance is such that exploiting the problem would take significant computing time.

There are a variety of different hypervisor configurations, which we broke down by processor type (in view of the Spectre microcode patches). Each of these needs independent performance and stability checks.

| Microcode | Assessment | #HVs | Processor name(s) |
|---|---|---|---|
| 06-3f-02 | covered | 3332 | E5-2630 v3 @ 2.40GHz, E5-2640 v3 @ 2.60GHz |
| 06-4f-01 | covered | 2460 | E5-2630 v4 @ 2.20GHz, E5-2650 v4 @ 2.20GHz |
| 06-3e-04 | hopefully | 1706 | E5-2650 v2 @ 2.60GHz |
| ?? | unclear | 427 | AMD Opteron(TM) Processor 6276 (CPU family: 21, Model: 1, Stepping: 2) |
| 06-2d-07 | unclear | 333 | E5-2630L 0 @ 2.00GHz, E5-2650 0 @ 2.00GHz |
| 06-2c-02 | unlikely | 168 | E5645 @ 2.40GHz, L5640 @ 2.27GHz, X5660 @ 2.80GHz |

These risks were explained by the CERN security team to the end users in their regular blogs.

Evaluating the performance impact

The High Energy Physics community uses a suite called HEPSPEC06 to benchmark compute resources. These are synthetic programs based on the C++ components of SPEC CPU2006 which match the instruction mix of typical physics programs. With this benchmark, we have started to re-benchmark the majority of the CPU models we have in the data centres, both on the physical hosts and on the guests. The measured performance loss across all architectures tested so far is about 2.5% in HEPSPEC06 (a number also confirmed by one of the LHC experiments using their real workloads), with a few cases approaching 7%. So for our physics codes, the effect of patching seems measurable, but much smaller than many expected.
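
As a small worked example of how such a percentage is obtained, the Python sketch below computes the relative loss for one CPU model and a host-count-weighted loss across several models. The function names and any scores passed to them are purely illustrative assumptions, not measured CERN values.

```python
def relative_loss(score_before, score_after):
    """Fractional benchmark loss for one CPU model (0.025 means 2.5%)."""
    return (score_before - score_after) / score_before


def weighted_loss(entries):
    """Fleet-wide fractional loss.

    `entries` is an iterable of (host_count, score_before, score_after)
    tuples, one per CPU model; all values are supplied by the caller.
    """
    entries = list(entries)
    total_before = sum(count * before for count, before, _ in entries)
    total_after = sum(count * after for count, _, after in entries)
    return (total_before - total_after) / total_before
```

Weighting by host count matters because a large loss on a rarely deployed CPU model changes the total capacity far less than a small loss on the most common one.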

Test the upgrade procedure and stability

With our environment based on CentOS and Scientific Linux, the deployment of the updates for Meltdown and Spectre was dependent on the upstream availability of the patches. These could be broken down into several parts:

- Firmware for the processors: the microcode_ctl packages provide additional patches to protect against some parts of Spectre. This package proved very dynamic, as new processor firmware was being added on a regular basis, and it was not always clear when an update needed to be applied: the package version would increase, but the new version did not always include an update for a particular hardware type. The Intel release notes list combinations such as "HSX C0(06-3f-02:6f) 3a->3b", which means that the microcode for processor description 06-3f-02:6f is upgraded from release 0x3a to 0x3b. The first three fields are the CPU family, model and stepping from /proc/cpuinfo, written in hexadecimal, and the current firmware level can be found in /sys/devices/system/cpu/cpu0/microcode/version. A simple script (spectre-cpu-microcode-checker.sh) was made available to the end users so they could check their systems, and this was also used by the administrators to validate the central IT services.

- For the operating system, we used a second script (spectre-meltdown-checker.sh) which was derived from the upstream GitHub code at https://github.com/speed47/spectre-meltdown-checker. The team maintaining this package were very responsive in incorporating our patches so that other sites could benefit from the combined analysis.
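
The microcode check described in the first item can be sketched in a few lines of Python. This is only an illustration of the idea and not the spectre-cpu-microcode-checker.sh script itself: the function names and the `required` table are hypothetical, and the only data point taken from the text is the 06-3f-02 signature with the 0x3b release from the quoted release note.

```python
def cpu_signature(cpuinfo_text):
    """Build the family-model-stepping signature (e.g. '06-3f-02')
    from the contents of /proc/cpuinfo."""
    fields = {}
    for line in cpuinfo_text.splitlines():
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        key = key.strip()
        # The first processor entry is enough; 'model name' is a different key.
        if key in ("cpu family", "model", "stepping") and key not in fields:
            fields[key] = int(value.strip())
    # /proc/cpuinfo prints decimal numbers, the signature uses hexadecimal.
    return "{:02x}-{:02x}-{:02x}".format(
        fields["cpu family"], fields["model"], fields["stepping"])


def microcode_is_current(signature, version_text, required):
    """Compare the running microcode level with the wanted one.

    `version_text` is the content of
    /sys/devices/system/cpu/cpu0/microcode/version (e.g. '0x3b').
    `required` maps signatures to minimum levels (hypothetical table).
    Returns True or False, or None when the signature is not in the table.
    """
    if signature not in required:
        return None
    return int(version_text.strip(), 16) >= required[signature]
```

On a host, the two inputs would be read from the files named above, for example `cpu_signature(open("/proc/cpuinfo").read())`. Returning `None` for an unknown signature keeps the "unclear" processor types visibly separate from those known to be covered.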

Communication with the users

For the cloud, there are several resource consumers.

- IT service administrators who provide higher level functions on top of the CERN cloud. Examples include file transfer services, information systems, web frameworks and experiment workload management systems. While some are in the IT department, others are representatives of their experiments or supporters for online control systems such as those used to manage the accelerator infrastructure.

- End users consume cloud resources by asking for virtual machines and using them as personal working environments. A typical case would be a macOS user who needs a Windows desktop: they create a Windows VM and use a protocol such as RDP to access it when required.

The communication approach was as follows:

- A meeting was held to discuss the risks of exploits, the status of the operating systems and the plan for deployment across the production facilities. In the Q&A session, the major concerns raised were around the potential impact on performance and the tuning options.

- An e-mail was sent to all owners of virtual machine resources informing them of the upcoming interventions.

- CERN management was informed of the risks and the plan for deployment.

CERN uses ServiceNow to provide a service desk for tickets and a status board of interventions and incidents. A single entry was used to communicate the current plans and status so that all cloud consumers could go to a single place for the latest information.

Execute the campaign

With the accelerator starting up again in March and the risk of the exploits, the approach taken was to complete the upgrades to the infrastructure in January, leaving February to find any residual problems and resolve them. As the handling of the compute/batch part of the infrastructure was relatively straightforward (with only one service on top), we focus below on the more delicate part: the hypervisors running services that support several thousand users in their daily work.

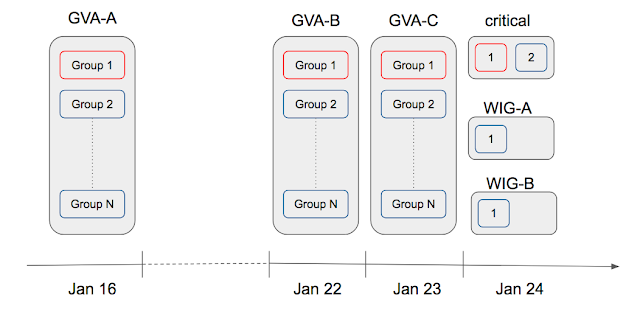

The layout of our infrastructure with its availability zones (AVZs) determined the overall structure and timeline of the upgrade. With effectively four AVZs in our data centre in Geneva and two AVZs for our remote resources in Budapest, we scheduled the upgrade for the services part of the resources over four days.

The main zones in Geneva were done one per day, with a break after the first one (GVA-A) in case there were unexpected difficulties to handle on the infrastructure or on the application side. The remaining zones were scheduled on consecutive days (GVA-B and GVA-C), with the smaller ones (critical, WIG-A, WIG-B) handled in sequential order on the last day. This way we upgraded around 400 hosts with 4,000 guests per day.

Within each zone, hypervisors were divided into 'reboot groups' which were restarted and checked before the next group was handled. These groups were determined by the OpenStack cells underlying the corresponding AVZs. Since some services needed their window of downtime to be limited, their hosting servers were moved to the special Group 1, the only one for which we could give a precise start time.
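
The grouping logic can be illustrated with a short Python sketch. It assumes one reboot group per cell and a caller-supplied set of hosts that need a fixed start time; the function name, the group labels and the input format are hypothetical and are not taken from the actual CERN tooling.

```python
from collections import defaultdict


def build_reboot_groups(hypervisors, time_critical=frozenset()):
    """Return an ordered list of (group_name, hosts) for one zone.

    `hypervisors` is an iterable of (hostname, cell) pairs.
    Hosts listed in `time_critical` go to 'Group 1', which is handled
    first; the remaining hosts are grouped by their cell.
    """
    group1 = []
    by_cell = defaultdict(list)
    for host, cell in hypervisors:
        if host in time_critical:
            group1.append(host)
        else:
            by_cell[cell].append(host)

    groups = []
    if group1:
        groups.append(("Group 1", sorted(group1)))
    # Later groups are numbered from 2, one per cell, in a stable order.
    for index, cell in enumerate(sorted(by_cell), start=2):
        groups.append(("Group {} ({})".format(index, cell), sorted(by_cell[cell])))
    return groups
```

Processing the returned list in order, and checking each group before starting the next, limits the impact of an unexpected problem to a single group.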

For each group several steps were performed:


- install all relevant packages

- check the next kernel is the desired one

- reset the BMC (needed for some specific hardware to prevent boot problems)

- log the nova and ping state of all guests

- stop all alarming

- stop nova

- shut down all instances via virsh

- reboot the hosts

- ... wait ... then fix hosts which did not come back

- check running kernel and vulnerability status on the rebooted hosts

- check and fix potential issues with the guests
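
The post-reboot check of the running kernel and the vulnerability status could look roughly like the Python sketch below. It is an assumption that the check reads the kernel's sysfs vulnerability files, which are only present on kernels carrying the fixes; the text does not say how the CERN scripts performed this step, and the function names are hypothetical.

```python
import os
import platform

VULN_DIR = "/sys/devices/system/cpu/vulnerabilities"


def classify(status):
    """Map a sysfs status line to a coarse state."""
    status = status.strip()
    if status.startswith("Not affected"):
        return "not affected"
    if status.startswith("Mitigation"):
        return "mitigated"
    if status.startswith("Vulnerable"):
        return "vulnerable"
    return "unknown"


def host_report(vuln_dir=VULN_DIR, names=("meltdown", "spectre_v1", "spectre_v2")):
    """Read one status file per vulnerability; a missing file counts as unknown."""
    report = {}
    for name in names:
        try:
            with open(os.path.join(vuln_dir, name)) as handle:
                report[name] = classify(handle.read())
        except OSError:
            report[name] = "unknown"
    return report


def kernel_matches(desired, running=None):
    """True if the running kernel release equals the desired one."""
    if running is None:
        running = platform.release()
    return running == desired
```

A host would be flagged for follow-up if `kernel_matches` returns `False` or if any entry in `host_report()` is `"vulnerable"` or `"unknown"`.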

As some of the hypervisors in the cloud had very long uptimes and this was the first time we systematically rebooted the whole infrastructure since the service went to full production about five years ago, we were not quite sure what kind of issues to expect, and in particular at which scale. To our relief, the problems encountered on the hosts hit less than 1% of the servers and included (in descending order of appearance)

- hosts stuck in shutdown (solved by IPMI reset)

- libvirtd stuck after reboot (solved by another reboot)

- hosts without network connectivity (solved by another reboot)

- hosts stuck in grub during boot (solved by reinstalling grub)

On the guest side, virtual machines were mostly ok when the underlying hypervisor was ok as well.

A few additional cases included

- incomplete kernel upgrades, so the root partition could not be found (solved by booting back into an older kernel and reinstalling the desired kernel)

- file system issues (solved by running file system repairs)

So, despite initial worries, we hit no major issues when rebooting the whole CERN cloud infrastructure!

Conclusions

While this kind of security issue does not arise very often, the key parts of the campaign follow standard steps: assessing the risk, planning the update, communicating with the user community, executing the campaign and handling incomplete updates.

Using cloud availability zones to schedule the deployment allowed users to easily understand when their virtual machines would be affected, and it encourages the good practice of load balancing resources.

References

- CERN security advisory for Meltdown / Spectre

- CERN IT Status Board

Authors

- Arne Wiebalck

- Jan Van Eldik

- Tim Bell