A combined meeting of the Swiss OpenStack and Rhone Alpes OpenStack user groups was held at CERN on Friday 6th December 2013. This is the 6th Swiss User Group meeting and the 2nd Rhone Alpes one.

Despite the cold and a number of long distance travelers, 85 people from banking, telecommunications, Academia/Research and IT companies gather to share OpenStack experiences across the region and get the latest news.

The day was split into two parts. In the morning, there was the opportunity for a visit to the ATLAS experiment, see a 3-D movie on how the experiment was built and visit the CERN public exhibition points at the Globe and Microcosm. Since the Large Hadron Collider is currently under going maintenance until 2015, the experimental areas are accessible for small groups with a guide.

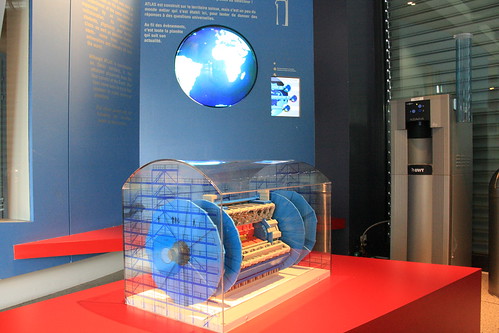

At the ATLAS control room, you could see a model of the detector

The real thing is slightly bigger and heavier... 100m underground, only one end is accessible currently from the viewing gallery.

The afternoon session presentations are available at the conference page.

After a quick introduction, I gave feedback on the latest board meeting in Hong Kong with topics such as the election process and the defcore discussion to answer the "What is OpenStack" question.

The following talk was an set of technical summaries of the Hong Kong summit from Belmiro, Patrick and Gergely. Belmiro covered the latest news on Nova, Glance and Cinder along with some slides from his deep dive talk on CERN's openstack cloud.

Patrick from Bull's xlcloud covered the latest news on Heat which is rapidly becoming a key part of the OpenStack environment as it not only provides a user facing service for orchestration but also is now a pre-requisite for other projects such as Trove, the database as a service.

In a good illustration of the difficulties of compatibility, the open office document failed to display the key slide on PowerPoint but Patrick covered the details while the PDF version was brought up. Heat currently supports AWS Cloud Formations but is now adding a native template language, HOT, to cover additional functions. The icehouse release will add more auto scaling features, integration with ceilometer and a move towards the TOSCA standard.

Gregely covered some of the user stories and the latest news on ceilometer as it starts to move into alarming on top of the existing metering function.

Alessandro then covered the online clouds at CERN which are opportunistically using the 1000s of servers attached to the CMS and ATLAS experiments when the LHC is not running. The aim is to be able to switch as fast as possible from the farm being used to filter the 1PB/s from the LHC to performing physics work. Current tests show it takes around 45 minutes to intantiate the VMs on the 1,400 hypervisors.

Jens-Christian gave a talk on the use of Ceph at SWITCH. Many of the aspects seem similar to the block storage that we are looking at within CERN's cloud. SWITCH are aiming a 1,000 core cluster to serve the Swiss academic community including dropbox, IaaS and app-store style services. It was particularly encouraging to see that SWITCH have been able to perform online upgrades between versions without problems.... the regular warning to be cautious with CephFS is also made, so using rbd rather than filesystem backed storage makes sense.

Martin gave us a detailed view on the options for geographically distributed clouds using OpenStack. This was intriguing on multiple levels in view of CERN's ongoing work with the community on federated identity along with some useful hints and tips on the different kinds of approaches. Martin converged onto using Regions to achieve the ultimate goals but there were several potential useful intermediate configurations such as cells which CERN is using extensively in the multi-data centre cloud with Budapest and Meyrin. I fully agree with Martin's perspective on the need for cells to become more than just a nova concept as we require similar functions in Glance and Cinder for the CERN use case. Martin had given the same talk in Paris on Thursday and was giving it again on Monday in Israel so he is doing a fine job in re-use of presentations.

Sergio described Elastic Cluster which is an open source tool for provisioning clusters for researchers. He illustrated the function with a youtube video which demonstrates the scaling of the cluster on top of a cloud infrastructure.

Finally, Dave Neary gave an introduction to Open Shift and how to deploy a Platform-as-a-Service solution. Using a simple git/ssh model, various PaaS instances can be deployed easily on top of an OpenStack cloud. The talk included a memorable demo where Dave showed the depth of his ruby skills but was rescued by the members of the audience and the application deployed to rounds of applause.

Many thanks to the CERN guides and administration for helping with the organisation, all of the attendees for coming and making it such a lively meeting and to Sven Michels and Belmiro Morerira for the photos.